Prostate cancer (PCa) is the most common cancer diagnosis and second-leading cause of cancer death in men in the United States1; it is most often treated curatively using radiation therapy (RT) or surgery. External beam RT is a noninvasive treatment option that provides high success rates for tumor control.2

Over the past decade, magnetic resonance imaging (MRI) has played an increasingly important role in the RT workflow.3 Anatomic and functional structures can be visualized using multiparametric MRI (mpMRI), which typically consists of T2-weighted images, diffusion-weighted images, apparent diffusion coefficient maps, and dynamic contrast-enhanced imaging. Although computed tomography (CT) has historically been the mainstay imaging modality in RT, the superior soft tissue contrast of MRI aids in the delineation of prostate tumors and nearby critical structures.4 Within the RT workflow, MRI can be used before or during RT delivery. Before delivery, MRI scanners within radiology or RT departments (also known as MR simulators) can be used to acquire images while patients are set up in the same position used for treatment, which assists in the treatment planning process. During RT, MRI systems can be combined with linear accelerators (linacs), the technology used to deliver photon-based external beam RT, to form hybrid systems known as MR-linacs. These systems allow for MR monitoring during radiation delivery or MR-guided adaptive RT (MRgART), which facilitates real-time plan adjustments to account for anatomic changes that occur during treatment.5

Abbreviations

CNN convolutional neural network

CT computed tomography

GAN generative adversarial network

linac linear accelerator

mpMRI multiparametric magnetic resonance imaging

MRgART magnetic resonance–guided adaptive radiotherapy

MRI magnetic resonance imaging

PCa prostate cancer

RT radiation therapy

Recently, there has been a substantial increase in the application of deep-learning technologies to medical imaging. Specifically, the architecture of convolutional neural networks (CNNs)6 is well suited to image pattern recognition. In brief, images are input into CNNs, where sequential filters are applied, layer by layer, downsampling the image into simplified features. These features can be used to classify the image7 or to synthesize a new image through upsampling; the synthesized image can delineate lesion boundaries or serve as an attenuation map for RT, for example by using a classic U-Net CNN architecture.8 Generative adversarial networks (GANs) are also effective for image synthesis and work by training 2 neural networks, a generator and a discriminator, to compete against one another so that the generated images appear increasingly realistic.9 Generative adversarial network variants, such as conditional GAN10 and cycleGAN,11 have also been successful in medical imaging applications. Conditional GANs incorporate labeled training data to produce more accurate results, whereas cycleGANs include 2 GANs that provide feedback to one another and allow for model training on unpaired data. Over the past several years, CNNs, GANs, and their variants have been demonstrated in a wide array of applications, from MRI-based cancer detection to image synthesis.
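As a minimal illustration of the filtering and downsampling steps described above, the following sketch applies one hand-picked 2 × 2 filter and one 2 × 2 max-pooling step to a toy image. It is not taken from any of the cited models: in a trained CNN the filter weights are learned from data, many filters are stacked, and the function names and values used here are illustrative assumptions.

```python
def convolve2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def max_pool2x2(feature_map):
    """Downsample by keeping the maximum of each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Toy 5x5 "image" with a vertical edge between the second and third columns.
toy_image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked vertical edge filter (an assumption; real CNN filters are learned).
edge_filter = [[-1, 1], [-1, 1]]

features = convolve2d_valid(toy_image, edge_filter)  # 4x4 feature map
pooled = max_pool2x2(features)                       # 2x2 simplified features
```

The feature map responds only where the edge is located, and pooling halves each spatial dimension while retaining that response, which is the sense in which an image is reduced to simplified features.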

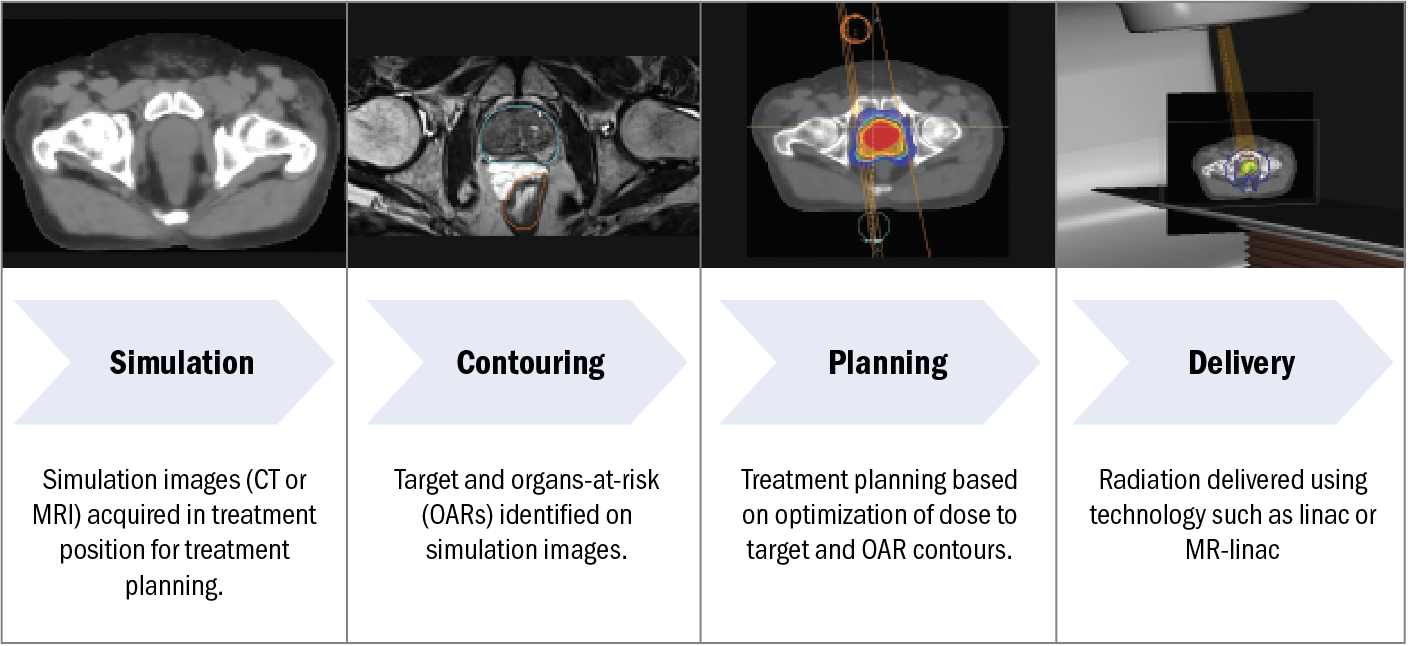

Figure 1. Schematic of the RT workflow, including simulation, contouring, planning, and delivery.

Abbreviations: CT, computed tomography; MR-linac, magnetic resonance linear accelerator; MRI, magnetic resonance imaging; OAR, organs at risk; RT, radiation therapy.

As MRI continues to play a critical role in the administration of RT, deep learning has been applied to each step of the external beam RT workflow, illustrated in Figure 1. After consultation with the radiation oncologist, the patient undergoes simulation (in which CT and/or MR images are acquired for RT planning), contouring (in which the target and organs at risk are identified on the simulation images), planning (in which the dose distribution is optimized to balance target coverage against sparing of organs at risk), and delivery (in which radiation is delivered). In this review, we highlight current deep learning–based applications involving MRI used in external beam RT for PCa.

Simulation

The RT workflow begins with patient simulation, where CT and/or MR images are acquired while the patient is immobilized, using the same setup as for treatment. Simulation images are used for 3 main purposes: (1) to provide anatomic information the physician uses to delineate the tumor and nearby organs at risk, (2) to provide a patient-specific map of relative electron density used for external beam RT dose calculation, and (3) to provide reference images for onboard image alignment.

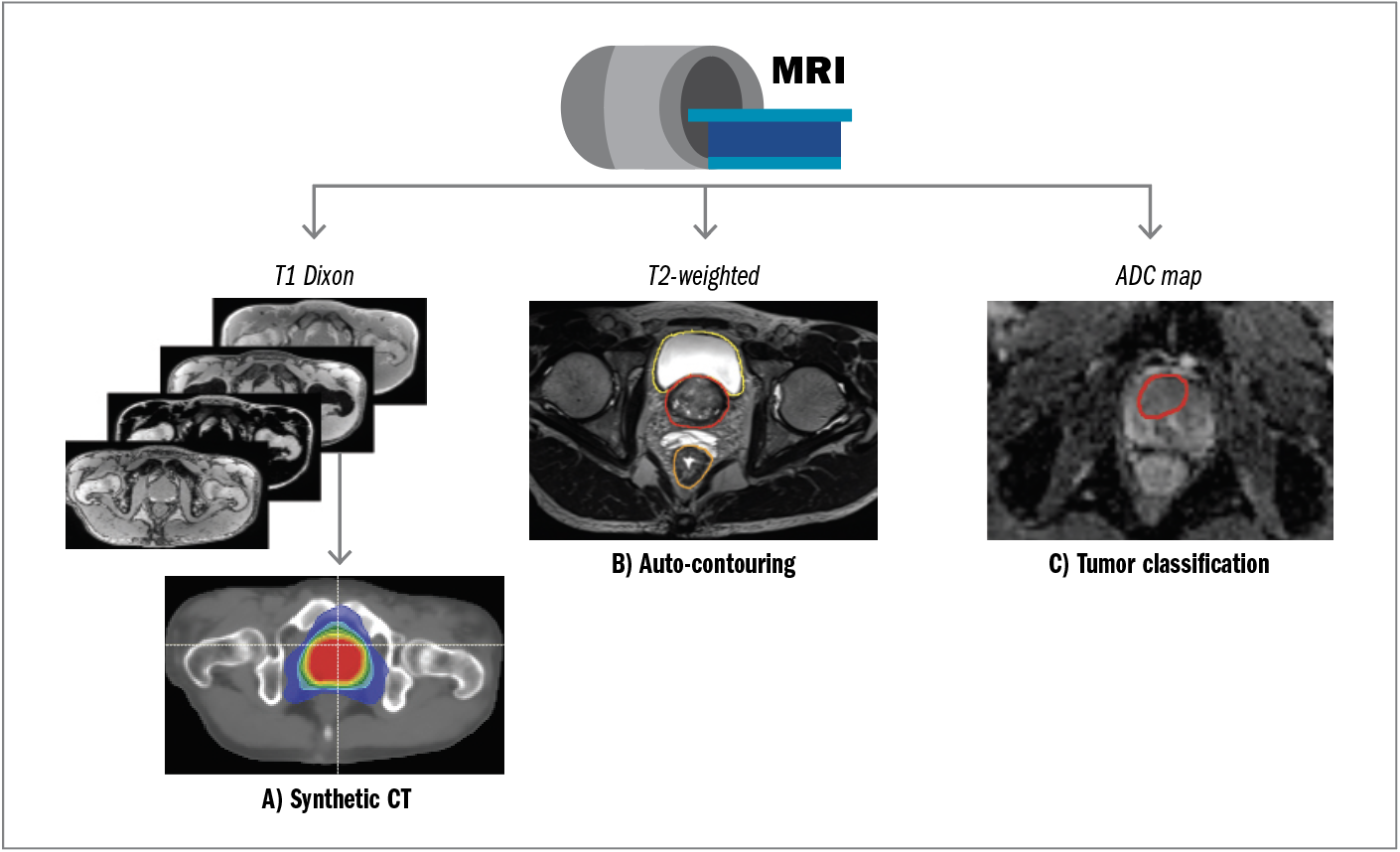

For purposes of dose calculation, CT is an ideal imaging modality because it provides a map of photon attenuation that can be converted to relative electron density. In contrast, MRI provides material magnetic properties but no inherent information about material photon attenuation or electron density. Therefore, traditional external beam RT workflows often use both CT (for dose calculation) and MRI (for anatomic delineation). Removing the CT scan and implementing an MRI-only simulation workflow, however, would be advantageous because it reduces the number of imaging procedures required for each patient and eliminates errors caused by co-registration of CT and MR images. To achieve such a workflow, deep learning has been used to generate synthetic CT images from MR images, as illustrated in Figure 2A.

In this example, T1 Dixon MRI provides fat-only, water-only, in-phase, and out-of-phase images (approximately 3-minute acquisition time on a 3-T MR simulator) that are input into a commercially available dual-architecture algorithm that first uses a CNN for tissue segmentation, followed by a conditional GAN for continuous Hounsfield unit CT generation.12 The model output is a synthetic CT dataset that can be used for dose calculation and treatment planning instead of conventional CT. Synthetic CT generation has also been achieved using single-sequence MRI input13; however, using multiple-input MRI scans provided by the Dixon sequences can increase model robustness. Overall, these MR-only solutions are in the early stages of clinical adoption and have been demonstrated to be an effective and safe RT method for PCa.14
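The reason a CT or synthetic CT dataset can be used for dose calculation is that each voxel's CT number (in Hounsfield units) can be mapped to relative electron density through a calibration curve. The sketch below shows one simple way such a lookup could be written, using piecewise-linear interpolation. The calibration points are hypothetical placeholders chosen for illustration only; clinical curves are measured for each scanner with a calibration phantom, and the function name is an assumption.

```python
from bisect import bisect_right

# Hypothetical calibration points (Hounsfield units, relative electron density).
# Illustrative values only; not a clinical calibration curve.
CALIBRATION = [(-1000.0, 0.0), (0.0, 1.0), (1000.0, 1.5), (3000.0, 2.5)]


def hu_to_relative_electron_density(hu):
    """Piecewise-linear interpolation, clamped to the end points of the curve."""
    hus = [point[0] for point in CALIBRATION]
    if hu <= hus[0]:
        return CALIBRATION[0][1]
    if hu >= hus[-1]:
        return CALIBRATION[-1][1]
    # Index of the calibration segment that contains this CT number.
    i = bisect_right(hus, hu) - 1
    (x0, y0), (x1, y1) = CALIBRATION[i], CALIBRATION[i + 1]
    return y0 + (y1 - y0) * (hu - x0) / (x1 - x0)
```

Under these assumed points, a water-equivalent voxel at 0 Hounsfield units maps to a relative electron density of 1.0, and values outside the calibrated range are clamped rather than extrapolated.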

Contouring

In addition to providing data for dose calculation, simulation images provide anatomic information the physician can use to identify, or contour, the target and nearby organs at risk. Deep learning has been used to automate the contouring process. Figure 2B provides an example using T2-weighted MRI as input to a deep learning–based autosegmentation algorithm for automatic generation of prostate, bladder, and rectum contours. Autosegmentation algorithms have increasingly been implemented in clinical practice to reduce manual labor and decrease interobserver and intraobserver variability. For example, Cha et al15 reported successful implementation of an in-house deep learning–based autosegmentation algorithm used in MR-based prostate RT planning that saved time and required infrequent manual editing.

Magnetic resonance imaging is also superior to CT for visualizing dominant intraprostatic lesions. Although external beam RT has typically delivered a uniform dose to the entire prostate gland, focal boosting is a strategy that delivers additional dose to dominant intraprostatic lesions, which present as hypointense lesions on apparent diffusion coefficient maps, as shown in Figure 2C. Results of the multi-institutional phase 3 Investigate the Benefit of a Focal Lesion Ablative Microboost in Prostate Cancer (FLAME) trial (ClinicalTrials.gov identifier NCT01168479), published in January 2021, showed a statistically significant (P < .001) improvement in biochemical disease-free survival when a focal boost was added to the macroscopic tumor visible on mpMRI (92% in the focal boost arm vs 85% in the standard arm at 5-year follow-up) without affecting toxicity or quality of life for patients with intermediate-risk and high-risk PCa.16 Although mpMRI is routinely used to detect and stage PCa, delineation of dominant intraprostatic lesions is not yet common practice, and there have been several reports of large intraobserver and interobserver variability when performing this task.17,18 To overcome this challenge, deep learning–based approaches for tumor classification have been implemented for autosegmentation of dominant intraprostatic lesions. Bagher-Ebadian et al19 achieved high performance in predicting dominant intraprostatic lesions with Gleason scores of at least 3 + 4 by using an adaptive neural network based on radiomic features extracted from T2-weighted images and apparent diffusion coefficient maps. Simeth et al20 assessed volumetric dominant intraprostatic lesion segmentation accuracy across 5 deep learning networks and found that a multiple-resolution residually connected network regularized with deep supervision implemented in the last convolutional block was the most generalizable across different MR scanners.

Figure 2. An example of an MRI simulation protocol that uses deep learning for (A) generating synthetic CT images for MR-only treatment planning; (B) autocontouring of organs at risk on T2-weighted MRI; and (C) autoclassification of dominant intraprostatic lesions for focal boosting using apparent diffusion coefficient maps.

Abbreviations: ADC, apparent diffusion coefficient; CT, computed tomography; MRI, magnetic resonance imaging.

Planning

After simulation and contouring, RT dose distribution is optimized during treatment planning to achieve prescription target coverage and sparing of nearby organs at risk. In the case of prostate external beam RT, this optimization is achieved by minimizing an objective function over the space of possible linac delivery parameters, such as gantry angle, monitor units, and multileaf collimator positioning, to calculate a fluence map that achieves an optimal dosimetric trade-off between target coverage and dose sparing of critical structures.21 In conventional workflows, this process is executed through manual adjustments to the objective function by treatment planners. It is time intensive and prone to human error and can result in variable quality across planners.

Automatic, deep learning–based treatment planning approaches have been explored to mitigate these limitations. For example, CNN models trained on input patient geometry and prescription doses have predicted dose distributions that are similar to their manually planned counterparts, suggesting that deep learning can be used to guide treatment planning.22 In addition to dose distribution predictions, full automation of treatment planning has been explored. Shen et al23 proposed a hierarchical intelligent automatic treatment planning framework that consists of 3 separate networks to emulate the decision-making processes of human planners. Hrinivich et al24 developed a reinforcement learning approach to rapidly and automatically generate deliverable PCa RT plans using multiparameter optimization. Attempts to address issues with generalizability across different clinicians and institutions have been explored through transfer learning, or the process of updating models with new or different data,25 although generalizability remains an ongoing challenge in all deep learning applications.
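To make the objective function described at the start of this section more concrete, the sketch below scores a candidate dose distribution with a weighted quadratic penalty: deviation of target voxel doses from the prescription plus organ-at-risk dose above a tolerance. This is one common textbook form, offered as an assumption; it is not the objective used by any specific planning system or study cited here, and the function name, parameters, and weights are hypothetical.

```python
def plan_objective(target_doses, oar_doses, prescription, oar_limit,
                   target_weight=1.0, oar_weight=1.0):
    """Weighted quadratic penalty for a candidate dose distribution (lower is better).

    target_doses / oar_doses: per-voxel doses (Gy) in the target and in one organ at risk.
    prescription: prescribed target dose (Gy).
    oar_limit: dose (Gy) above which the organ at risk is penalized.
    """
    # Penalize both underdosing and overdosing of the target.
    target_term = sum((d - prescription) ** 2 for d in target_doses) / len(target_doses)
    # Penalize only the portion of organ-at-risk dose that exceeds the limit.
    oar_term = sum(max(0.0, d - oar_limit) ** 2 for d in oar_doses) / len(oar_doses)
    return target_weight * target_term + oar_weight * oar_term


# Toy example with made-up voxel doses.
baseline = plan_objective([60.0, 58.0, 62.0], [20.0, 35.0],
                          prescription=60.0, oar_limit=30.0)
oar_emphasized = plan_objective([60.0, 58.0, 62.0], [20.0, 35.0],
                                prescription=60.0, oar_limit=30.0, oar_weight=2.0)
```

Changing `target_weight` and `oar_weight` shifts the trade-off between coverage and sparing. In this simplified picture, these weights stand in for the manual adjustments made by planners, and they are the kind of quantity that automated approaches aim to set or bypass.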

Delivery

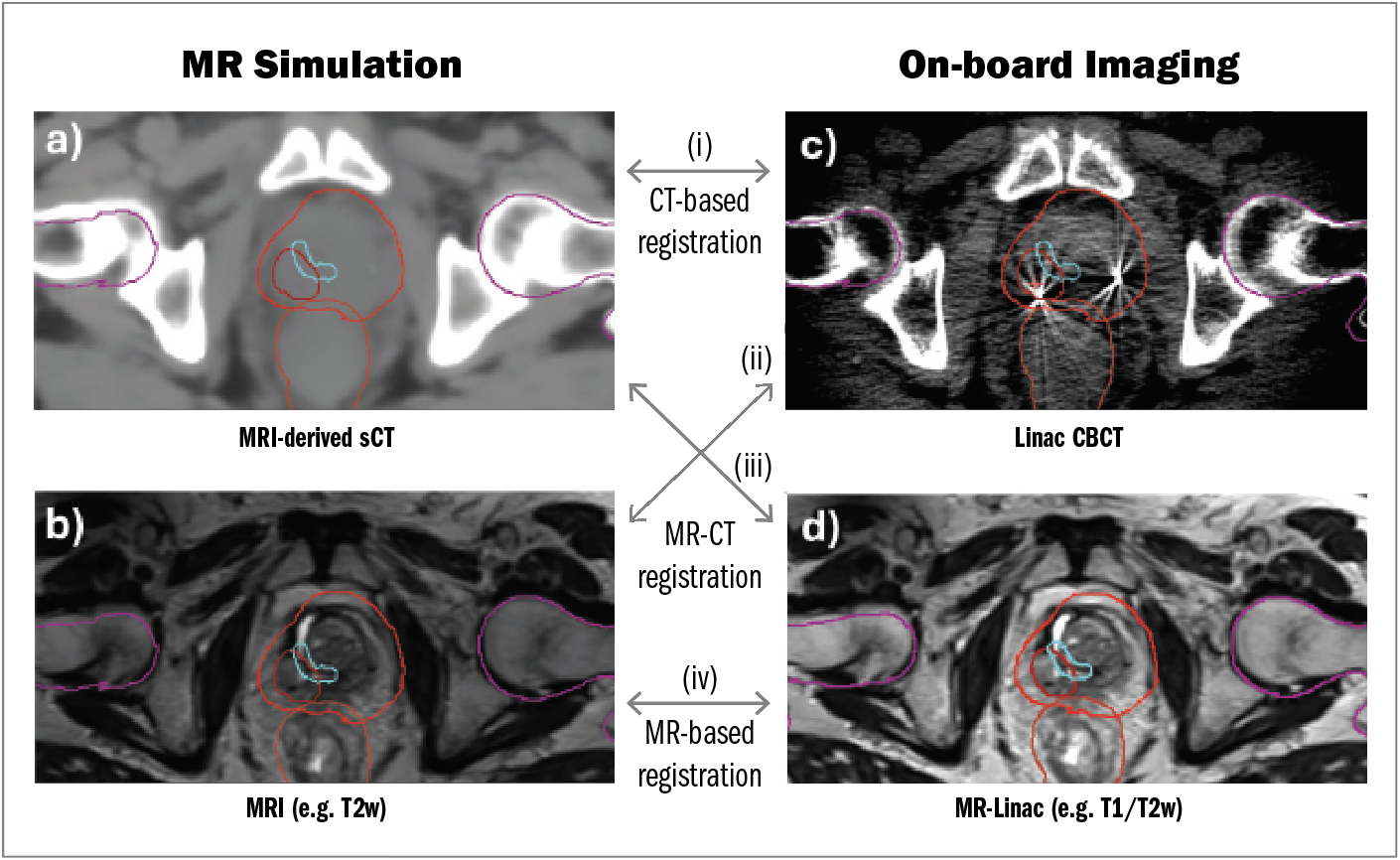

In the context of MR-guided external beam RT, pretreatment imaging may include an MRI-derived synthetic CT (Figure 3A) or an MRI (Figure 3B), while delivery can be achieved by using a traditional linac with onboard cone beam CT (Figure 3C) or, in the case of MR-linacs, by using MRI guidance (Figure 3D).

Simulation imaging (Figure 3A and 3B) is compared with onboard imaging (Figure 3C and 3D) for daily patient alignment and can be automated through deep learning–based image registration. A comprehensive review of deep learning for medical image registration is reported by Fu et al,26 with brief descriptions provided here:

- Synthetic CT to cone beam CT registration. Synthetic CT and cone beam CT share similar image intensity distributions and are often co-registered through optimization of intensity-based and mutual information–based metrics. To mitigate challenges related to noise levels and reduced field of view in cone beam CT images, reinforcement learning has been applied in which registration metrics and strategies are updated during optimization to improve robustness and accuracy.27 A practical consideration for synthetic CTs, however, is that implanted fiducial markers do not appear in synthetic CT images and must be accounted for if used for fiducial-based alignment.28

- MR simulation to cone beam CT image registration. Multimodal MRI to cone beam CT image registration may be desirable for alignment of MR simulation images to linac-based cone beam CT for tasks such as improving delineation, planning, and dose monitoring of dominant intraprostatic lesions based on mpMRI. For example, multimodal registration has been performed by CNN-based segmentation of MR and cone beam CT images, followed by the application of a 3-dimensional point cloud matching network,29 which outperformed other techniques for non–deep learning–based MR to cone beam CT image registration.

- Synthetic CT to MR-linac image registration. MRI-generated synthetic CT images can effectively replace CT images for purposes of dose calculation in prostate RT to streamline MRgART approaches that currently require CT image acquisition. In this workflow, however, the synthetic CT image is used purely for dose calculation because unimodal (MR to MR) image registration is generally preferred over multimodal (synthetic CT to MR) image registration.

- MR simulation to MR-linac image registration. MR to MR image registration may be desirable when aligning patients based on anatomy best visualized using MRI, such as the dominant intraprostatic lesion, or for real-time target tracking during delivery. For the former situation, nonrigid registration can be achieved by building a statistical deformation model, with principal component analysis used to construct a deformation vector field.30 Magnetic resonance prostate images were found to be accurately co-registered when using this technique (Dice scores of 0.87 and 0.80 for 2-dimensional and 3-dimensional images, respectively). For real-time target tracking, deep learning models have been developed to achieve fast, deformable image registration using 2-dimensional cine-MR images as inputs and motion vector fields as outputs.31 This approach outperformed conventional registration approaches in the thorax and abdomen and could be similarly applied in the prostate setting.
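The Dice score used above to quantify registration accuracy measures the overlap between two binary masks (for example, a prostate contour on the fixed image and the corresponding contour propagated from the registered image). It is defined as twice the number of voxels shared by both masks divided by the total number of voxels in the two masks. The sketch below is a minimal implementation for masks stored as flat sequences of 0s and 1s; the function name and the handling of two empty masks are assumptions made for illustration.

```python
def dice_score(mask_a, mask_b):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks of equal length."""
    if len(mask_a) != len(mask_b):
        raise ValueError("masks must have the same number of voxels")
    intersection = sum(1 for a, b in zip(mask_a, mask_b) if a and b)
    total = sum(1 for a in mask_a if a) + sum(1 for b in mask_b if b)
    if total == 0:
        # Convention assumed here: two empty masks are treated as perfect agreement.
        return 1.0
    return 2.0 * intersection / total


# Toy example: two 5-voxel masks that share 2 of their 3 foreground voxels.
overlap = dice_score([1, 1, 1, 0, 0], [0, 1, 1, 1, 0])
```

A score of 1 indicates identical masks and a score of 0 indicates no overlap.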

Figure 3. Examples of co-registration between MR simulation data obtained by (A) MR-derived synthetic CT or (B) MRI and onboard imaging obtained by (C) linac cone beam CT or (D) MR-linac. Unimodal co-registration can be achieved through CT-based registration (ie, synthetic CT to cone beam CT) or MR-based registration (ie, MRI to MR-linac). Multimodal co-registration can be achieved through MR-CT registration.

Abbreviations: CBCT, cone beam computed tomography; CT, computed tomography; linac, linear accelerator; MR-linac, magnetic resonance linear accelerator; MRI, magnetic resonance imaging; sCT, synthetic computed tomography.

A major challenge of MRgART is that it is time intensive, requiring a mean of 45 minutes for delivery vs the approximately 10 minutes required for nonadaptive approaches on a conventional linac.32 Opportunities to increase workflow efficiency include decreasing MRI acquisition time and automating the contouring and treatment planning required for each delivery.

Image acquisition can be sped up substantially by undersampling the MRI and improving the resulting image quality using deep learning. For example, Zhu et al33 proposed a technique whereby undersampled MR data were improved through a data augmentation approach using a model pretrained with patient-specific MRI simulation data. This approach reduced the total time for image generation by 66% and improved image registration accuracy.

In addition to the deep learning–based autocontouring approaches described previously, other strategies take advantage of the large number of MR images acquired for each patient on an MR-linac. For example, Fransson et al34 developed a patient-specific autocontouring network trained on the MR images acquired during the first fraction delivery and updated with each subsequently acquired MR dataset.

Conclusion

Magnetic resonance imaging is increasingly being used in the treatment of PCa with external beam RT. Relative to CT, mpMRI provides excellent soft tissue contrast and can be used to contour the prostate gland, dominant intraprostatic lesion, and nearby critical structures for MR-based treatment planning or for MRgART. Deep learning architectures, such as CNNs and GANs, are well suited for image-based tasks and have been applied to image classification and synthesis.

For external beam RT specifically, CNNs and GANs have been used to synthesize CT images from MRI to facilitate MR-only treatment planning, resulting in fewer imaging procedures required of patients and a reduction in errors caused by multimodal image registration. Deep learning–based autocontouring methods are increasingly used in the clinic to identify tumors and critical structures. Treatment planning automation has been achieved using deep learning strategies designed to emulate human treatment planners. Finally, deep learning has been applied to several aspects of the treatment delivery stage, including image registration and MRI acceleration. For example, deep learning–based automatic image registration has been demonstrated for MR to CT–, MR to MR–, and synthetic CT to CT–based co-registration, depending on the type of imaging used for simulation and treatment. To overcome challenges of long treatment time for MRgART, deep learning approaches have been used for both autocontouring of daily anatomy and reconstruction of undersampled MRI data.

Continued advances in deep learning, such as transformer and graph architectures, and generative techniques, such as score matching and diffusion models, are expected to continue to improve the efficiency and efficacy of prostate RT workflows. Limitations of deep learning–based approaches include data scarcity, particularly for RT imaging datasets, which are often much smaller than radiology datasets. To address limitations in dataset sizes while adhering to regulations for Health Insurance Portability and Accountability Act compliance, security, and patient privacy, federated learning has been explored to facilitate the sharing of model parameters instead of patient data. Federated learning has been successfully implemented for multi-institutional MRI-based detection of PCa,35 with similar paradigms possible for image generation, autocontouring, and autoplanning.
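The idea of sharing model parameters instead of patient data can be sketched with federated averaging, a widely used aggregation rule in which a central server combines the parameters trained at each site, weighted by the size of each site's local dataset. The sketch below is a simplified illustration under that assumption; the cited study may use a different aggregation scheme, and the function name and toy values are hypothetical.

```python
def federated_average(site_params, site_sizes):
    """Average per-site parameter vectors, weighting each site by its local sample count.

    site_params: one flat list of model parameters per institution.
    site_sizes: number of local training samples at each institution.
    Only parameters and counts are exchanged; no images leave a site.
    """
    total = sum(site_sizes)
    n_params = len(site_params[0])
    return [
        sum(params[k] * size for params, size in zip(site_params, site_sizes)) / total
        for k in range(n_params)
    ]


# Toy example: two institutions with 1 and 3 local samples, respectively.
global_params = federated_average([[1.0, 2.0], [3.0, 6.0]], [1, 3])
```

In practice this aggregation is repeated over many rounds, with the averaged parameters sent back to each site for further local training.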

References

1. Siegel RL, Giaquinto AN, Jemal A. Cancer statistics, 2024. CA Cancer J Clin. 2024;74(1):12-49. doi:10.3322/caac.21820

2. Widmark A, Klepp O, Solberg A, et al. Endocrine treatment, with or without radiotherapy, in locally advanced prostate cancer (SPCG-7/SFUO-3): an open randomised phase III trial. Lancet. 2009;373(9660):301-308. doi:10.1016/S0140-6736(08)61815-2

3. Chandarana H, Wang H, Tijssen R, Das IJ. Emerging role of MRI in radiation therapy. J Magn Reson Imaging. 2018;48(6):1468-1478. doi:10.1002/jmri.26271

4. Villeirs GM, De Meerleer GO. Magnetic resonance imaging (MRI) anatomy of the prostate and application of MRI in radiotherapy planning. Eur J Radiol. 2007;63(3):361-368. doi:10.1016/j.ejrad.2007.06.030

5. Pathmanathan AU, van As NJ, Kerkmeijer LGW, et al. Magnetic resonance imaging-guided adaptive radiation therapy: a “game changer” for prostate treatment? Int J Radiat Oncol Biol Phys. 2018;100(2):361-373. doi:10.1016/j.ijrobp.2017.10.020

6. Gu J, Wang Z, Kuen J, Ma L, et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018;77:354-377. doi:10.1016/j.patcog.2017.10.013

7. Li Q, Cai W, Wang X, Zhou Y, Feng DD, Chen M. Medical image classification with convolutional neural network. In: 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV). IEEE; 2014:844-848. doi:10.1109/ICARCV.2014.7064414

8. Han X. MR‐based synthetic CT generation using a deep convolutional neural network method. Med Phys. 2017;44(4):1408-1419. doi:10.1002/mp.12155

9. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y. Generative adversarial nets. In: Advances in Neural Information Processing Systems 27 (NIPS 2014). NeurIPS. 2014;27.

10. Emami H, Dong M, Nejad-Davarani SP, Glide-Hurst CK. SA-GAN: Structure-aware GAN for organ-preserving synthetic CT generation. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2021. Springer; 2021. doi:10.1007/978-3-030-87231-1_46

11. Scholey J, Vinas L, Kearney V, et al. Improved accuracy of relative electron density and proton stopping power ratio through cycleGAN machine learning. Phys Med Biol. 2022;67(10):10.1088/1361-6560/ac6725. doi:10.1088/1361-6560/ac6725

12. Hoesl M, Corral NE, Mistry N. MR-based synthetic CT. An AI-based algorithm for continuous Hounsfield units in the pelvis and brain—with syngo.via RT image suite. In: MReadings: MR in RT. 8th ed. Siemens Healthineers; 2022:30-42.

13. Scholey JE, Rajagopal A, Vasquez EG, Sudhyadhom A, Larson PEZ. Generation of synthetic megavoltage CT for MRI‐only radiotherapy treatment planning using a 3D deep convolutional neural network. Med Phys. 2022;49(10):6622-6634. doi:10.1002/mp.15876

14. Tenhunen M, Korhonen J, Kapanen M, et al. MRI-only based radiation therapy of prostate cancer: workflow and early clinical experience. Acta Oncologica. 2018;57(7):902-907. doi:10.1080/0284186X.2018.1445284

15. Cha E, Elguindi S, Onochie I, Gorovets D, Deasy JO, Zelefsky M, Gillespie EF. Clinical implementation of deep learning contour autosegmentation for prostate radiotherapy. Radiother Oncol. 2021;159:1-7. doi:10.1016/j.radonc.2021.02.040

16. Kerkmeijer LGW, Groen VH, Pos FJ, et al. Focal boost to the intraprostatic tumor in external beam radiotherapy for patients with localized prostate cancer: results from the FLAME randomized phase III trial. J Clin Oncol. 2021;39(7):787-796. doi:10.1200/JCO.20.02873

17. Bratan F, Niaf E, Melodelima C, Chesnais AL, Souchon R, Mège-Lechevallier F, Colombel M, Rouvière O. Influence of imaging and histological factors on prostate cancer detection and localisation on multiparametric MRI: a prospective study. Eur Radiol. 2013;23(7):2019-2029. doi:10.1007/s00330-013-2795-0

18. Jung SI, Donati OF, Vargas HA, Goldman D, Hricak H, Akin O. Transition zone prostate cancer: incremental value of diffusion-weighted endorectal MR imaging in tumor detection and assessment of aggressiveness. Radiology. 2013;269(2):493-503. doi:10.1148/radiology.13130029

19. Bagher-Ebadian H, Janic B, Liu C, et al. Detection of dominant intra-prostatic lesions in patients with prostate cancer using an artificial neural network and MR multi-modal radiomics analysis. Front Oncol. 2019;9:1313. doi:10.3389/fonc.2019.01313

20. Simeth J, Jiang J, Nosov A, et al. Deep learning‐based dominant index lesion segmentation for MR‐guided radiation therapy of prostate cancer. Med Phys. 2023;50(8):4854-4870. doi:10.1002/mp.16320

21. Webb S. Intensity-Modulated Radiation Therapy. CRC Press; 2015.

22. Nguyen D, Long T, Jia X, et al. A feasibility study for predicting optimal radiation therapy dose distributions of prostate cancer patients from patient anatomy using deep learning. Sci Rep. 2019;9(1):1076. doi:10.1038/s41598-018-37741-x

23. Shen C, Chen L, Jia X. A hierarchical deep reinforcement learning framework for intelligent automatic treatment planning of prostate cancer intensity modulated radiation therapy. Phys Med Biol. 2021;66(13):10.1088/1361-6560/ac09a2. doi:10.1088/1361-6560/ac09a2

24. Hrinivich WT, Bhattacharya M, Mekki L, et al. Clinical VMAT machine parameter optimization for localized prostate cancer using deep reinforcement learning. Med Phys. 2024;51(6):3972-3984. doi:10.1002/mp.17100

25. Kandalan RN, Nguyen D, Rezaeian NH, et al. Dose prediction with deep learning for prostate cancer radiation therapy: model adaptation to different treatment planning practices. Radiother Oncol. 2020;153:228-235. doi:10.1016/j.radonc.2020.10.027

26. Fu Y, Lei Y, Wang T, Curran WJ, Liu T, Yang X. Deep learning in medical image registration: a review. Phys Med Biol. 2020;65(20):20TR01. doi:10.1088/1361-6560/ab843e

27. Liao R, Miao S, de Tournemire P, et al. An artificial agent for robust image registration. Proc AAAI Conf Artif Intell. 2017;31(1). doi:10.1609/aaai.v31i1.11230

28. Singhrao K, Zubair M, Nano T, Scholey JE, Descovich M. End‐to‐end validation of fiducial tracking accuracy in robotic radiosurgery using MRI‐only simulation imaging. Med Phys. 2024;51(1):31-41. doi:10.1002/mp.16857

29. Fu Y, Wang T, Lei Y, et al. Deformable MR‐CBCT prostate registration using biomechanically constrained deep learning networks. Med Phys. 2021;48(1):253-263. doi:10.1002/mp.14584

30. Krebs J, Mansi T, Delingette H, et al. Robust non-rigid registration through agent-based action learning. In: Medical Image Computing and Computer Assisted Intervention−MICCAI 2017. Springer; 2017:344-352. doi:10.1007/978-3-319-66182-7_40

31. Hunt B, Gill GS, Alexander DA, et al. Fast deformable image registration for real-time target tracking during radiation therapy using cine MRI and deep learning. Int J Radiat Oncol Biol Phys. 2023;115(4):983-993.

32. Tocco BR, Kishan AU, Ma TM, Kerkmeijer LG, Tree AC. MR-guided radiotherapy for prostate cancer. Front Oncol. 2020;10:616291. doi:10.3389/fonc.2020.616291

33. Zhu J, Chen X, Liu Y, et al. Improving accelerated 3D imaging in MRI-guided radiotherapy for prostate cancer using a deep learning method. Radiat Oncol. 2023;18(1):108. doi:10.1186/s13014-023-02306-4

34. Fransson S, Tilly D, Strand R. Patient specific deep learning based segmentation for magnetic resonance guided prostate radiotherapy. Phys Imaging Radiat Oncol. 2022;23:38-42. doi:10.1016/j.phro.2022.06.001

35. Rajagopal A, Redekop E, Kemisetti A, et al. Federated learning with research prototypes: application to multi-center MRI-based detection of prostate cancer with diverse histopathology. Acad Radiol. 2023;30(4):644-657. doi:10.1016/j.acra.2023.02.012

Article Information

Published: December 13, 2024.

Conflict of Interest Disclosures: The authors have nothing to disclose.

Funding/Support: None.

Author Contributions: J. Scholey (first author) was responsible for writing the manuscript and creating all figures. A. Rajagopal provided deep learning expertise. J. Hong provided clinical and medical expertise on MRI for PCa. P. Larson provided MRI science expertise. K. Sheng (senior author) provided RT planning expertise.

Data Availability Statement: No new data were generated for this article.